In-Memory Deep Learning Accelerator

Deep learning has shown exciting successes in classification, feature extraction, pattern matching, and related tasks. In many real-time applications, machine learning models are pre-trained in the cloud and then deployed on edge devices, such as mobile phones and Internet of Things (IoT) devices, for fast and energy-efficient local inference. Because these devices have very limited computing resources and energy budgets, specialized inference hardware that is real-time yet low-power is urgently needed. Leveraging our expertise in analog and mixed-signal design, we are exploring mixed-signal computing paradigms for deep learning and statistical machine learning models.

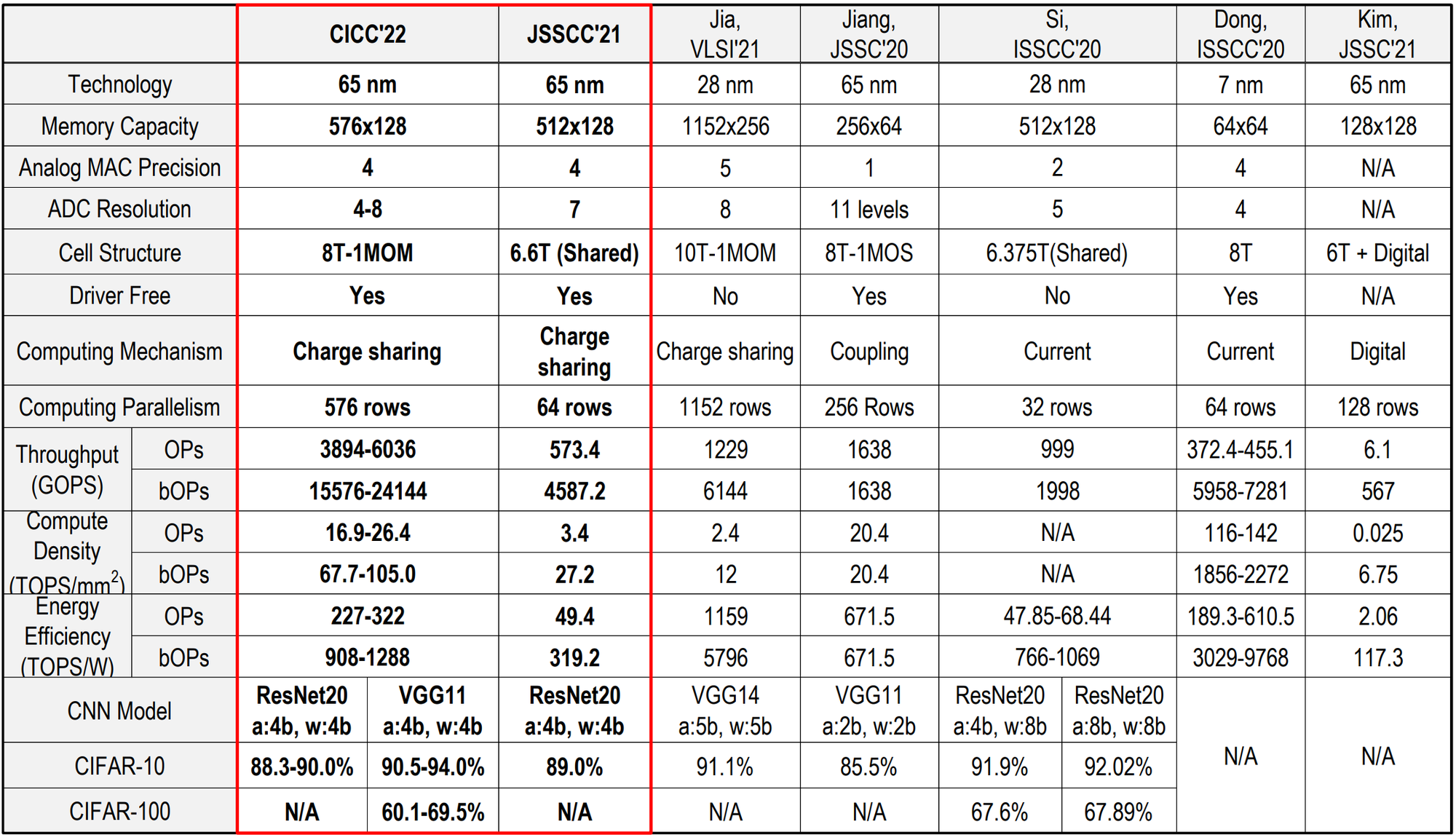

Precise and Programmable In-SRAM Computing [JSSC2021] [ISLPED2021] [CICC2022]

We explore novel circuit topologies that enable accurate, programmable-bitwidth deep learning accelerators and overcome the limitations of existing in-memory computing designs. We are particularly interested in high-performance, low-power mixed-signal circuits (DACs, ADCs, etc.) designed specifically for mixed-signal computing systems; these circuits are largely overlooked, or their performance is optimistically assumed, in the literature. Together with our collaborators, we also pursue architecture designs and training methods co-optimized with in-SRAM computing circuits.
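To illustrate why the data converters matter, the following is a minimal behavioral sketch, not a model of any circuit in the cited papers. It assumes an ideal analog summation of weight-activation products followed by a uniform signed ADC, and it assumes activations are bounded by 1 in magnitude so the full-scale range can be set from the weights. The function names `quantize` and `imc_dot` are hypothetical and chosen only for this example.

```python
def quantize(x, bits, full_scale):
    """Uniform signed quantizer: maps x to one of 2**bits codes."""
    if full_scale == 0:
        return 0.0
    step = full_scale / 2 ** (bits - 1)           # LSB size
    code = round(x / step)                        # nearest code
    lo, hi = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    code = max(lo, min(hi, code))                 # clip to the code range
    return code * step


def imc_dot(weights, activations, adc_bits):
    """Toy in-memory dot product: ideal analog sum, then ADC readout.

    Assumption: |activation| <= 1, so the worst-case analog sum is
    bounded by the sum of absolute weights (used as ADC full scale).
    """
    analog_sum = sum(w * a for w, a in zip(weights, activations))
    full_scale = sum(abs(w) for w in weights)
    return quantize(analog_sum, adc_bits, full_scale)


if __name__ == "__main__":
    w = [0.31, -0.72, 0.18, 0.55]
    a = [0.9, 0.4, -0.6, 0.2]
    exact = sum(wi * ai for wi, ai in zip(w, a))
    for bits in (3, 5, 8):
        approx = imc_dot(w, a, bits)
        print(f"{bits}-bit ADC: readout={approx:.4f}, error={approx - exact:+.4f}")
```

In this sketch the readout error is bounded by one ADC step, which halves with each added bit. Real designs must also account for effects this model ignores, such as DAC nonlinearity, noise, and device mismatch.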